Johnny DiBlasi

Light-emitting diodes, plants, LCD monitors, custom electronics, audio

2022 - ongoing

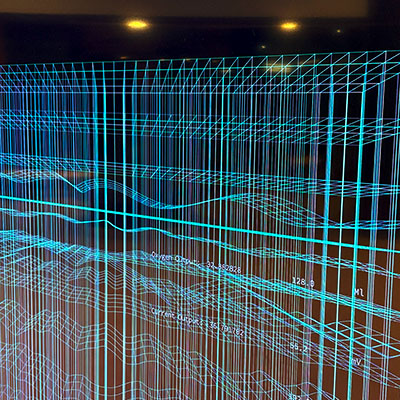

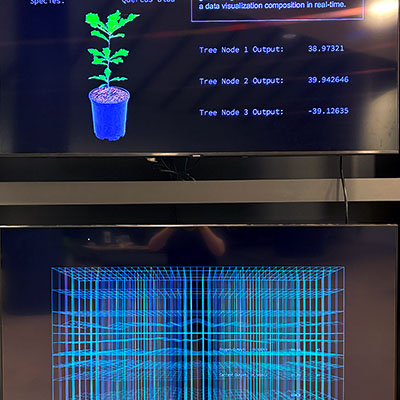

Transcoded Ecologies is a hybrid biological-technological installation featuring a bio-driven AI agent that generates various frequencies of light for a network of tree saplings while driving sound synthesizers and a data visualization composition in real time. The work creates an immersive experience in which the chemical and physical byproducts of an array of plants or tree saplings are measured, and the plants are assisted by a machine agent that holds a model of optimum environmental and light conditions. The agent builds different contexts from climate data and biological cycles, using time series of days, weeks, and years that represent a time frame from before the Industrial Revolution. Climate and chemical data are also gathered from various natural ecosystems in and around the site of the installation space, and these datasets are superimposed to create a model of the microclimates in that locality. Each ‘tree node’ in the array is placed within its own metal frame. The frame suspends a large grow lightbox over the plant, and each tree node in the network gathers biodata such as CO2, temperature, light levels (visible and infrared), and the electrical current produced by the plant.

These internal and external datasets will be sent to a server, where they are downloaded, catalogued, and stored. The data is also fed into the system to inform the agent’s model, which controls the light's intensity and color along with various computer-generated sound wave frequencies, or oscillators. Sensors and electrodes attached to the plants gather biodata from the grouping of plants within a controlled or fabricated micro-environment. Specifically, I am interested in reading the electrical current signals moving throughout the plant and the CO2 levels. This data controls the installation’s system of generative light and sound, which in turn provides light and sound for the plants in a feedback loop. Additionally, a sound system within the space translates the data into projected sound waves that fill the space with multiple channels of audio. LED strips in light boxes suspended on top of a frame serve as grow lights above the plants. The biodata is thus transcoded and interpreted into various experiential forms, such as frequencies of sound waves, color and intensity of light, and rhythm of changing light. For the final installation piece, the work will be scaled up to include multiple 'tree nodes' that are networked together, so that a more nuanced and complex interaction of light and sound will evolve between the various groupings.
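As a rough illustration of the transcoding step, and not the installation's actual software, the sketch below maps one biodata reading to an oscillator frequency, an LED color, and a light intensity. Everything specific in it is an assumption made for the example: the `Reading` fields, the sensor ranges (400 to 2000 ppm CO2, -50 to 50 mV for the plant signal), the 110 to 880 Hz pitch range, and the choice to drive hue from CO2 and pitch from the electrical signal.

```python
import colorsys
from dataclasses import dataclass


@dataclass
class Reading:
    """One biodata sample from a tree node (hypothetical fields and units)."""
    co2_ppm: float    # CO2 concentration, parts per million
    signal_mv: float  # electrical signal measured on the plant, millivolts


def normalize(value: float, low: float, high: float) -> float:
    """Scale value into 0..1, clamping anything outside [low, high]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


def transcode(reading: Reading) -> dict:
    """Map a reading to sound and light parameters (illustrative mapping only)."""
    # Assumed sensor ranges; a real system would calibrate these per plant.
    signal = normalize(reading.signal_mv, -50.0, 50.0)
    co2 = normalize(reading.co2_ppm, 400.0, 2000.0)

    # Exponential pitch mapping: 110 Hz at signal 0.0, 880 Hz at signal 1.0.
    freq_hz = 110.0 * (2.0 ** (3.0 * signal))

    # Hue follows CO2, from red (0.0) up to violet (0.75), at full saturation.
    r, g, b = colorsys.hsv_to_rgb(0.75 * co2, 1.0, 1.0)
    rgb = tuple(round(c * 255) for c in (r, g, b))

    # Light intensity (0..1) follows the plant's electrical signal.
    return {"freq_hz": freq_hz, "rgb": rgb, "intensity": signal}


if __name__ == "__main__":
    print(transcode(Reading(co2_ppm=650.0, signal_mv=12.0)))
```

In a feedback loop of the kind described above, a function like `transcode` would be called on each new sample, with its output sent to the oscillators and LED strips, whose light the plants then respond to.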